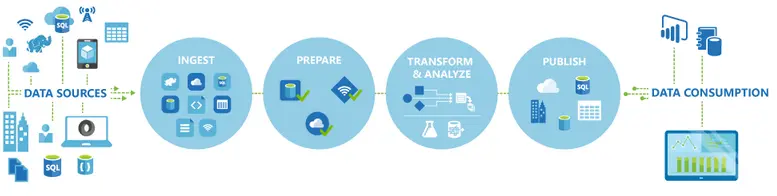

Azure Data Factory (ADF) is Microsoft's cloud-based data integration service. It moves data to and from stores and services such as Azure Data Lake, HDInsight, Azure SQL Database, Azure Machine Learning (Cognitive Services), and Azure Blob Storage, and it orchestrates and automates the movement and transformation of that data. Azure Data Factory works across on-premises sources, cloud sources, and SaaS applications to extract, transform, and finally load the data.

Pipelines and activities in Azure

Azure Data Factory allows you to create data pipelines that move and transform data, and then run the pipelines on a specified schedule (hourly, daily, weekly, etc.).

What is a data pipeline?

In a Data Factory solution, you create one or more data pipelines. A pipeline is a logical grouping of activities that together perform a task. An attractive feature of ADF is that the service offers a holistic monitoring and management experience over these pipelines. To understand pipelines better, we first need to understand activities.

What is an activity?

Activities define the actions to perform on your data. For example, you may use a Copy activity to copy data from one data store to another data store. Data Factory supports two types of activities: data movement activities and data transformation activities.
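To make the relationship between a pipeline and its activities concrete, here is a minimal sketch in Python that builds a pipeline definition containing a single Copy activity and prints it as JSON. The overall shape (a named pipeline whose `properties.activities` list holds activities with `inputs`, `outputs`, and `typeProperties`) follows the JSON format used by recent Data Factory versions, but exact property names can differ between service versions, so treat it as an illustration rather than a definition to deploy. The helper functions and the pipeline, activity, and dataset names are all hypothetical.

```python
import json


def copy_activity(name, input_dataset, output_dataset):
    """Return a dict shaped like a Data Factory Copy activity.

    Assumption: the source is Blob Storage and the sink is a SQL database;
    the dataset names passed in are placeholders, not real datasets.
    """
    return {
        "name": name,
        "type": "Copy",
        "inputs": [{"referenceName": input_dataset, "type": "DatasetReference"}],
        "outputs": [{"referenceName": output_dataset, "type": "DatasetReference"}],
        "typeProperties": {
            "source": {"type": "BlobSource"},
            "sink": {"type": "SqlSink"},
        },
    }


def pipeline(name, activities):
    """Group activities into one pipeline: a logical unit that performs a task."""
    return {"name": name, "properties": {"activities": list(activities)}}


# Hypothetical example: one pipeline that contains one Copy activity.
example = pipeline(
    "CopyBlobToSqlPipeline",
    [copy_activity("CopyFromBlobToSql", "InputBlobDataset", "OutputSqlDataset")],
)

pipeline_json = json.dumps(example, indent=2)
print(pipeline_json)
```

Adding a second activity, such as a transformation step, would mean appending another dict to the `activities` list; the pipeline remains the unit that groups them.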

To learn more, follow the Step by Step Azure Data Factory Tutorials.