SharePoint 2010 remains among the most popular document management systems for high-traffic sites and Fortune 500 corporate environments with large volumes of sensitive documents. Sometimes, managing all those documents gets to be too much, even for SharePoint, and document searches can slow to a frustrating crawl. Recently, we solved a fascinating puzzle for one of our corporate clients, who had begun to lose significant productivity due to slow searches. We invite you to match wits with our best engineers and coders to find out how we solved The Case of the Reluctant SharePoint. As a bonus, note that each section is named for a classic film of mystery and suspense. Score one point for each one that you’ve seen.

The Usual Suspects

Our client was very happy when we deployed SharePoint 2010 across their corporate network. After a couple of migrations, however, end users started complaining about load times when viewing pages across the SharePoint portal. We led off our investigation by identifying the following areas of concern as possible culprits:

• Custom .Net Code (webparts, workflows, event handlers, etc.)

• SharePoint Server farm architecture

• Database

Code reviews did not provide any leads. Back to the drawing board.

The 39 Steps (Actually, it only took us 3)

For our next line of inquiry, we examined the server architecture and the database to identify bottlenecks that could be degrading performance.

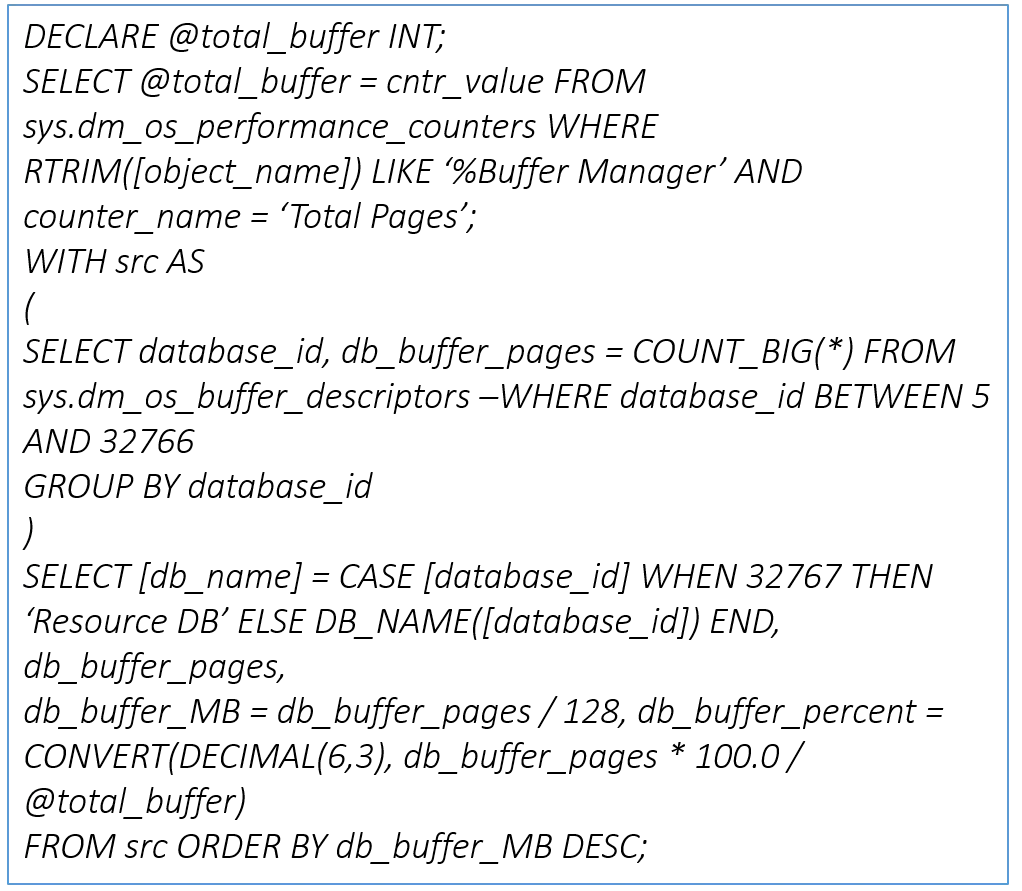

Using PerfMon on the database server (to monitor CPU and memory usage), we found that memory consumption was close to 95%. Something was giving SharePoint a bad headache. We went on to investigate potential memory bottlenecks, starting by checking buffer memory usage per database with a SQL query.

Here we found a major clue. The query results suggested that the Search Application database was consuming most of the buffer memory.
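For readers who want to run a similar check, one common approach is to count cached pages per database in the `sys.dm_os_buffer_descriptors` view, where each row represents one 8 KB page held in the buffer pool. The sketch below is an illustration rather than the exact query from our investigation. It assumes the `Invoke-Sqlcmd` cmdlet is available (it ships with the SQL Server PowerShell tools), that the account has VIEW SERVER STATE permission, and it uses a placeholder instance name, `SQLSERVER01`.

```powershell
# Hypothetical instance name; replace with your SharePoint database server.
$sqlInstance = "SQLSERVER01"

# Each row in sys.dm_os_buffer_descriptors is one 8 KB page in the buffer pool,
# so COUNT(*) * 8 / 1024 approximates the cached size per database in MB.
$query = @"
SELECT
    CASE WHEN database_id = 32767 THEN 'ResourceDb'
         ELSE DB_NAME(database_id) END AS DatabaseName,
    COUNT(*) * 8 / 1024 AS CachedSizeMB
FROM sys.dm_os_buffer_descriptors
GROUP BY database_id
ORDER BY CachedSizeMB DESC;
"@

# Largest consumers of buffer memory appear at the top of the output.
Invoke-Sqlcmd -ServerInstance $sqlInstance -Database "master" -Query $query |
    Format-Table -AutoSize
```

A database that dominates the top of this list is a reasonable place to start looking for the workload that is filling the buffer pool.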

Operation Bottleneck

What process within the Search Application was taking up all the buffer? After analyzing and reviewing the Search Application, we made the following observations:

• The incremental crawl interval (5 minutes) was much shorter than the actual incremental crawl duration (more than 30 minutes), leading to a stack of crawls.

• The same WFEs (web front ends) were serving both user and crawler HTTP requests.

• Users reported better performance when the incremental crawl was stopped or when the incremental crawl interval was increased to 3 hours, which presumably gave the buffer time to clear out.
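A quick way to compare the scheduled interval with how long a crawl actually takes is to read both from the content sources. The following is a minimal sketch, assuming it runs in the SharePoint 2010 Management Shell on a farm with a single Search Service Application. The column names `LastCrawlMinutes` and `RepeatIntervalMinutes` are our own labels, and the timing reflects the most recent crawl of any type, not necessarily an incremental one.

```powershell
# Assumes exactly one Search Service Application exists in the farm.
$ssa = Get-SPEnterpriseSearchServiceApplication

Get-SPEnterpriseSearchCrawlContentSource -SearchApplication $ssa |
    Select-Object Name, CrawlStatus, CrawlStarted, CrawlCompleted,
        @{ Name = "LastCrawlMinutes"; Expression = {
            # Only meaningful once the most recent crawl has finished.
            if ($_.CrawlCompleted -gt $_.CrawlStarted) {
                [math]::Round(($_.CrawlCompleted - $_.CrawlStarted).TotalMinutes, 1)
            }
        } },
        @{ Name = "RepeatIntervalMinutes"; Expression = {
            # Empty when no incremental schedule is defined for the content source.
            $_.IncrementalCrawlSchedule.RepeatInterval
        } } |
    Format-List
```

If `LastCrawlMinutes` is consistently larger than `RepeatIntervalMinutes`, the next crawl is due before the current one finishes, which matches the stacking pattern described above.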

We implemented the following changes in the Search Application architecture and immediately saw a drastic improvement in overall site performance:

1. Remove one of the servers from load balancing

• Load balanced URL is http://constoso.com/

• Load balanced servers are WFE1, WFE2, and WFE3

In this example, WFE3 is removed from the load-balanced pool.

2. Keep the “Microsoft SharePoint Foundation Web Application” service running on this server (WFE3) so that it can serve the HTTP requests

3. Update the Alternate Access Mapping to ensure that the site can be accessed using the server name or IP address (http://WFE3/)

4. Perform these steps to redirect crawler traffic to the dedicated front-end web server:

At the Windows PowerShell command prompt, run the script in the following example:

$listOfUri = New-Object System.Collections.Generic.List[System.Uri](1)

$zoneUrl = [Microsoft.SharePoint.Administration.SPUrlZone]'Default'

$webAppUrl = "http://constoso.com/"

$webApp = Get-SPWebApplication -Identity $webAppUrl

$webApp.SiteDataServers.Remove($zoneUrl) # By default this has no items to remove

$URLOfDedicatedMachine = New-Object System.Uri("http://WFE3") # The server removed from load balancing

$listOfUri.Add($URLOfDedicatedMachine)

$webApp.SiteDataServers.Add($zoneUrl, $listOfUri)

$webApp.Update()

Verify that the front-end web server is configured for crawling by running the following script at the Windows PowerShell command prompt:

$WebApplication = Get-SPWebApplication

$WebApplication | Format-List Url, SiteDataServers

If SiteDataServers lists any values for a web application, that web application uses a dedicated front-end web server for crawling.

When a front-end web server is dedicated to search crawls, run the script below on that server to disable the HTTP throttling that would otherwise limit the load the server accepts from requests and services.

$svc=[Microsoft.SharePoint.Administration.SPWebServiceInstance]::LocalContent;

$svc.DisableLocalHttpThrottling=$true;

$svc.Update()

The Golden Spider

Our final step was to shorten the incremental crawl interval from 3 hours to 1 hour, which keeps search results reasonably fresh while still preventing crawls from stacking. How did you do? Did you figure out the mystery before we did? If you’ve had to deal with similar SharePoint problems, we’d love to hear your story. If you have a tough mystery for us to crack, our private investigators are standing by.